Sentinel Hub Statistical API

This notebook shows how to use Sentinel Hub Statistical API to obtain aggregated statistical satellite data for areas of interest. For more information about Statistical API please check the official service documentation.

Prerequisites

Imports

The tutorial requires additional packages geopandas, matplotlib, and seaborn which are not dependencies of sentinelhub-py.

[1]:

%matplotlib inline

import geopandas as gpd

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

from sentinelhub import (

CRS,

BBox,

DataCollection,

Geometry,

SentinelHubStatistical,

SentinelHubStatisticalDownloadClient,

SHConfig,

parse_time,

)

Credentials

Process API requires Sentinel Hub account. Please check configuration instructions about how to set up your Sentinel Hub credentials.

[2]:

config = SHConfig()

if not config.sh_client_id or not config.sh_client_secret:

print("Warning! To use Statistical API, please provide the credentials (OAuth client ID and client secret).")

Helper function

A helper function that will be used in this tutorial.

[3]:

def stats_to_df(stats_data):

"""Transform Statistical API response into a pandas.DataFrame"""

df_data = []

for single_data in stats_data["data"]:

df_entry = {}

is_valid_entry = True

df_entry["interval_from"] = parse_time(single_data["interval"]["from"]).date()

df_entry["interval_to"] = parse_time(single_data["interval"]["to"]).date()

for output_name, output_data in single_data["outputs"].items():

for band_name, band_values in output_data["bands"].items():

band_stats = band_values["stats"]

if band_stats["sampleCount"] == band_stats["noDataCount"]:

is_valid_entry = False

break

for stat_name, value in band_stats.items():

col_name = f"{output_name}_{band_name}_{stat_name}"

if stat_name == "percentiles":

for perc, perc_val in value.items():

perc_col_name = f"{col_name}_{perc}"

df_entry[perc_col_name] = perc_val

else:

df_entry[col_name] = value

if is_valid_entry:

df_data.append(df_entry)

return pd.DataFrame(df_data)

Make a Statistical API request

In the Process API tutorial, we have seen how to obtain satellite imagery. Statistical API can be used in a very similar way. The main difference is that the results of Statistical API are aggregated statistical values of satellite data instead of entire images. In many use cases, such values are all that we need. By using Statistical API we can avoid downloading and processing large amounts of satellite data.

Let’s take the same bounding box of Betsiboka Estuary, used in Process API tutorial, and make a Statistical API request.

[4]:

betsiboka_bbox = BBox((46.16, -16.15, 46.51, -15.58), CRS.WGS84)

rgb_evalscript = """

//VERSION=3

function setup() {

return {

input: [

{

bands: [

"B02",

"B03",

"B04",

"dataMask"

]

}

],

output: [

{

id: "rgb",

bands: ["R", "G", "B"]

},

{

id: "dataMask",

bands: 1

}

]

}

}

function evaluatePixel(samples) {

return {

rgb: [samples.B04, samples.B03, samples.B02],

dataMask: [samples.dataMask]

};

}

"""

rgb_request = SentinelHubStatistical(

aggregation=SentinelHubStatistical.aggregation(

evalscript=rgb_evalscript,

time_interval=("2020-06-07", "2020-06-13"),

aggregation_interval="P1D",

size=(631, 1047),

),

input_data=[SentinelHubStatistical.input_data(DataCollection.SENTINEL2_L1C, maxcc=0.8)],

bbox=betsiboka_bbox,

config=config,

)

The following will send the request to Sentinel Hub service and obtain results.

[5]:

%%time

rgb_stats = rgb_request.get_data()[0]

rgb_stats

CPU times: user 55.3 ms, sys: 10.3 ms, total: 65.6 ms

Wall time: 20.3 s

[5]:

{'data': [{'interval': {'from': '2020-06-07T00:00:00Z',

'to': '2020-06-08T00:00:00Z'},

'outputs': {'rgb': {'bands': {'R': {'stats': {'min': 0.00419999985024333,

'max': 0.7027999758720398,

'mean': 0.1124257412122722,

'stDev': 0.04222600867954154,

'sampleCount': 660657,

'noDataCount': 0}},

'B': {'stats': {'min': 0.054999999701976776,

'max': 0.5311999917030334,

'mean': 0.10198085449414412,

'stDev': 0.01494034416514153,

'sampleCount': 660657,

'noDataCount': 0}},

'G': {'stats': {'min': 0.030400000512599945,

'max': 0.5981000065803528,

'mean': 0.09885213961499702,

'stDev': 0.019804562978698967,

'sampleCount': 660657,

'noDataCount': 0}}}}}},

{'interval': {'from': '2020-06-12T00:00:00Z', 'to': '2020-06-13T00:00:00Z'},

'outputs': {'rgb': {'bands': {'R': {'stats': {'min': 0.004600000102072954,

'max': 0.7160000205039978,

'mean': 0.11546704480268109,

'stDev': 0.06332157724593372,

'sampleCount': 660657,

'noDataCount': 0}},

'B': {'stats': {'min': 0.05920000001788139,

'max': 0.5658000111579895,

'mean': 0.11913311262009575,

'stDev': 0.04636540384197817,

'sampleCount': 660657,

'noDataCount': 0}},

'G': {'stats': {'min': 0.03779999911785126,

'max': 0.6126000285148621,

'mean': 0.11290437308774512,

'stDev': 0.048338260231641964,

'sampleCount': 660657,

'noDataCount': 0}}}}}}],

'status': 'OK'}

We obtained statistical data for pixels for each band and for both available acquisition dates.

Multiple requests for a collection of geometries

The real value of Statistical API shows for use cases where we have a large collection of small geometries, sparsely distributed over a large geographical area, and we would like to obtain statistical information for each of them.

In this example, we will take 4 small polygons, each marking a different land cover type, and collect NDVI values for each of them.

[6]:

polygons_gdf = gpd.read_file("data/statapi_test.geojson")

polygons_gdf

[6]:

| land_type | geometry | |

|---|---|---|

| 0 | water | POLYGON ((411873.909 5126285.627, 412036.018 5... |

| 1 | forest | POLYGON ((451909.515 5087408.351, 452291.478 5... |

| 2 | agricultural | POLYGON ((553495.985 5137450.826, 554130.662 5... |

| 3 | urban | POLYGON ((548010.445 5156156.292, 549867.483 5... |

For each polygon, we define a Statistical API request. Requested statistical data will be calculated on 10-meter resolution and aggregated per day with an available acquisition. We will also request histogram values.

[7]:

yearly_time_interval = "2020-01-01", "2020-12-31"

ndvi_evalscript = """

//VERSION=3

function setup() {

return {

input: [

{

bands: [

"B04",

"B08",

"dataMask"

]

}

],

output: [

{

id: "ndvi",

bands: 1

},

{

id: "dataMask",

bands: 1

}

]

}

}

function evaluatePixel(samples) {

return {

ndvi: [index(samples.B08, samples.B04)],

dataMask: [samples.dataMask]

};

}

"""

aggregation = SentinelHubStatistical.aggregation(

evalscript=ndvi_evalscript, time_interval=yearly_time_interval, aggregation_interval="P1D", resolution=(10, 10)

)

input_data = SentinelHubStatistical.input_data(DataCollection.SENTINEL2_L2A)

histogram_calculations = {"ndvi": {"histograms": {"default": {"nBins": 20, "lowEdge": -1.0, "highEdge": 1.0}}}}

ndvi_requests = []

for geo_shape in polygons_gdf.geometry.values:

request = SentinelHubStatistical(

aggregation=aggregation,

input_data=[input_data],

geometry=Geometry(geo_shape, crs=CRS(polygons_gdf.crs)),

calculations=histogram_calculations,

config=config,

)

ndvi_requests.append(request)

Instead of triggering download for each request separately, we can pass request objects together to a download client object, which will execute them in parallel using multiple threads. This way the download process will be faster.

[8]:

%%time

download_requests = [ndvi_request.download_list[0] for ndvi_request in ndvi_requests]

client = SentinelHubStatisticalDownloadClient(config=config)

ndvi_stats = client.download(download_requests)

len(ndvi_stats)

CPU times: user 113 ms, sys: 16.5 ms, total: 130 ms

Wall time: 16.1 s

[8]:

4

Let’s convert this data into a tabular form by transforming it into a pandas dataframe.

[9]:

ndvi_dfs = [stats_to_df(polygon_stats) for polygon_stats in ndvi_stats]

for df, land_type in zip(ndvi_dfs, polygons_gdf["land_type"].values):

df["land_type"] = land_type

ndvi_df = pd.concat(ndvi_dfs)

ndvi_df

[9]:

| interval_from | interval_to | ndvi_B0_min | ndvi_B0_max | ndvi_B0_mean | ndvi_B0_stDev | ndvi_B0_sampleCount | ndvi_B0_noDataCount | land_type | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 2020-01-01 | 2020-01-02 | -0.043547 | 0.078686 | 0.035990 | 0.014587 | 9174 | 4322 | water |

| 1 | 2020-01-04 | 2020-01-05 | 0.013191 | 0.115481 | 0.059611 | 0.015508 | 9174 | 4322 | water |

| 2 | 2020-01-06 | 2020-01-07 | -1.000000 | 0.812500 | -0.203617 | 0.222675 | 9174 | 4322 | water |

| 3 | 2020-01-09 | 2020-01-10 | -0.964912 | 0.962963 | -0.029157 | 0.281115 | 9174 | 4322 | water |

| 4 | 2020-01-11 | 2020-01-12 | -1.000000 | 0.947368 | -0.032308 | 0.239918 | 9174 | 4322 | water |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 140 | 2020-12-18 | 2020-12-19 | -0.087464 | 0.067416 | -0.006859 | 0.016857 | 43263 | 11755 | urban |

| 141 | 2020-12-21 | 2020-12-22 | -0.072165 | 0.042030 | -0.011940 | 0.009287 | 43263 | 11755 | urban |

| 142 | 2020-12-23 | 2020-12-24 | -0.068307 | 0.718450 | 0.224862 | 0.123213 | 43263 | 11755 | urban |

| 143 | 2020-12-26 | 2020-12-27 | -0.175758 | 0.994366 | 0.334077 | 0.200383 | 43263 | 11755 | urban |

| 144 | 2020-12-28 | 2020-12-29 | -0.047584 | 0.093174 | 0.034037 | 0.016839 | 43263 | 11755 | urban |

505 rows × 9 columns

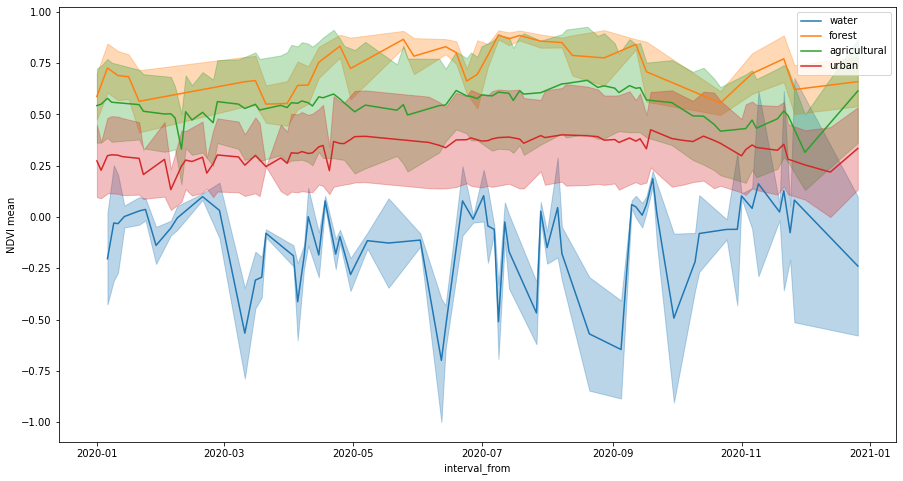

The following plot shows time series of mean values, buffered by standard deviation values.

[10]:

fig, ax = plt.subplots(figsize=(15, 8))

ax.set_ylabel("NDVI mean")

for idx, land_type in enumerate(polygons_gdf["land_type"].values):

series = ndvi_df[ndvi_df["land_type"] == land_type]

series.plot(ax=ax, x="interval_from", y="ndvi_B0_mean", color=f"C{idx}", label=land_type)

ax.fill_between(

series.interval_from.values,

series["ndvi_B0_mean"] - series["ndvi_B0_stDev"],

series["ndvi_B0_mean"] + series["ndvi_B0_stDev"],

color=f"C{idx}",

alpha=0.3,

);

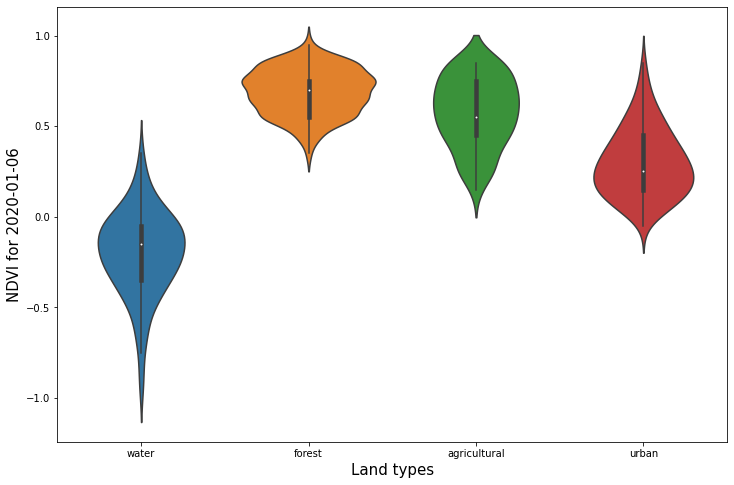

Let’s also plot histograms for a certain timestamp.

[11]:

TIMESTAMP_INDEX = 2

plot_data = []

timestamp = None

for stats in ndvi_stats:

bins = stats["data"][TIMESTAMP_INDEX]["outputs"]["ndvi"]["bands"]["B0"]["histogram"]["bins"]

timestamp = stats["data"][TIMESTAMP_INDEX]["interval"]["from"].split("T")[0]

counts = [value["count"] for value in bins]

total_counts = sum(counts)

counts = [round(100 * count / total_counts) for count in counts]

bin_size = bins[1]["lowEdge"] - bins[0]["lowEdge"]

splits = [value["lowEdge"] + bin_size / 2 for value in bins]

data = []

for count, split in zip(counts, splits):

data.extend([split] * count)

plot_data.append(np.array(data))

fig, ax = plt.subplots(figsize=(12, 8))

ax = sns.violinplot(data=plot_data, ax=ax)

ax.set(xticklabels=polygons_gdf["land_type"].values)

plt.xlabel("Land types", fontsize=15)

plt.ylabel(f"NDVI for {timestamp}", fontsize=15);

Reduce service processing costs

In case of large-scale processing, it becomes important, how many processing units we spend making Statistical API requests. We can decrease this number if we write an evalscript that outputs integer values instead of floats.

In this example, we will download statistics for all Sentinel-2 L2A bands, cloud masks and probabilities, and a few remote sensing indices. Such a collection of values would typically be used as an input to a machine learning model.

The following evalscript will:

request band values in digital numbers, instead of reflectances,

use

toUINTfunction to convert values from indices into integers that will fit intoSampleType.UINT16,mask pixels for which cloud mask

CLMindicates that they contain clouds. Such pixels will not be included in statistics.

[12]:

with open("./data/statapi_evalscript.js", "r") as fp:

features_evalscript = fp.read()

print(features_evalscript)

//VERSION=3

function setup() {

return {

input: [{

bands: ["B01", "B02", "B03", "B04", "B05", "B06", "B07", "B08", "B8A", "B09", "B11", "B12", "CLM", "CLP", "dataMask"],

units: "DN"

}],

output: [

{

id: "bands",

bands: ["B01", "B02", "B03", "B04", "B05", "B06", "B07", "B08", "B8A", "B09", "B11", "B12"],

sampleType: "UINT16"

},

{

id: "masks",

bands: ["CLM"],

sampleType: "UINT16"

},

{

id: "indices",

bands: ["NDVI", "NDVI_RE1", "NBSI", "CLP"],

sampleType: "UINT16"

},

{

id: "dataMask",

bands: 1

}]

}

}

function evaluatePixel(samples) {

// Normalised Difference Vegetation Index and variation

let NDVI = index(samples.B08, samples.B04);

let NDVI_RE1 = index(samples.B08, samples.B05);

// Bare Soil Index

let NBSI = index((samples.B11 + samples.B04), (samples.B08 + samples.B02));

// cloud probability normalized to interval [0, 1]

let CLP = samples.CLP / 255.0;

// masking cloudy pixels

let combinedMask = samples.dataMask

if (samples.CLM > 0) {

combinedMask = 0;

}

const f = 5000;

return {

bands: [samples.B01, samples.B02, samples.B03, samples.B04, samples.B05, samples.B06,

samples.B07, samples.B08, samples.B8A, samples.B09, samples.B11, samples.B12],

masks: [samples.CLM],

indices: [toUINT(NDVI, f), toUINT(NDVI_RE1, f), toUINT(NBSI, f), toUINT(CLP, f)],

dataMask: [combinedMask]

};

}

function toUINT(product, constant){

// Clamp the output to [-1, 10] and convert it to a UNIT16

// value that can be converted back to float later.

if (product < -1) {

product = -1;

} else if (product > 10) {

product = 10;

}

return Math.round(product * constant) + constant;

}

Let’s create requests for all 4 polygons. Additionally, we will request statistics for 5th, 50th and 95th percentiles.

[13]:

aggregation = SentinelHubStatistical.aggregation(

evalscript=features_evalscript, time_interval=yearly_time_interval, aggregation_interval="P1D", resolution=(10, 10)

)

calculations = {"default": {"statistics": {"default": {"percentiles": {"k": [5, 50, 95]}}}}}

features_requests = []

for geo_shape in polygons_gdf.geometry.values:

request = SentinelHubStatistical(

aggregation=aggregation,

input_data=[SentinelHubStatistical.input_data(DataCollection.SENTINEL2_L2A)],

geometry=Geometry(geo_shape, crs=CRS(polygons_gdf.crs)),

calculations=calculations,

config=config,

)

features_requests.append(request)

[14]:

%%time

download_requests = [request.download_list[0] for request in features_requests]

client = SentinelHubStatisticalDownloadClient(config=config)

features_stats = client.download(download_requests)

len(features_stats)

CPU times: user 123 ms, sys: 5.85 ms, total: 129 ms

Wall time: 27.5 s

[14]:

4

Let’s convert service response into a dataframe. Compared to the previous example this dataframe will have more columns, as we requested more types of features, and fewer rows, as in some cases all pixels contained clouds and were masked.

[15]:

features_dfs = [stats_to_df(polygon_stats) for polygon_stats in features_stats]

for df, land_type in zip(features_dfs, polygons_gdf["land_type"].values):

df["land_type"] = land_type

features_df = pd.concat(features_dfs)

features_df

[15]:

| interval_from | interval_to | indices_NDVI_min | indices_NDVI_max | indices_NDVI_mean | indices_NDVI_stDev | indices_NDVI_sampleCount | indices_NDVI_noDataCount | indices_NDVI_percentiles_5.0 | indices_NDVI_percentiles_95.0 | ... | bands_B12_min | bands_B12_max | bands_B12_mean | bands_B12_stDev | bands_B12_sampleCount | bands_B12_noDataCount | bands_B12_percentiles_5.0 | bands_B12_percentiles_95.0 | bands_B12_percentiles_50.0 | land_type | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2020-01-06 | 2020-01-07 | 0.0 | 9063.0 | 3981.917972 | 1113.380383 | 9174 | 4322 | 1887.0 | 5607.0 | ... | 0.0 | 117.0 | 30.087799 | 15.364080 | 9174 | 4322 | 5.0 | 55.0 | 31.0 | water |

| 1 | 2020-01-09 | 2020-01-10 | 175.0 | 9815.0 | 4854.220115 | 1405.574312 | 9174 | 4322 | 2444.0 | 6914.0 | ... | 14.0 | 207.0 | 60.070486 | 18.942562 | 9174 | 4322 | 35.0 | 90.0 | 58.0 | water |

| 2 | 2020-01-11 | 2020-01-12 | 0.0 | 9737.0 | 4838.451154 | 1199.583916 | 9174 | 4322 | 2766.0 | 6667.0 | ... | 0.0 | 236.0 | 26.004946 | 22.600604 | 9174 | 4322 | 4.0 | 58.0 | 21.0 | water |

| 3 | 2020-01-14 | 2020-01-15 | 4054.0 | 6590.0 | 5011.732165 | 266.857469 | 9174 | 4324 | 4574.0 | 5352.0 | ... | 62.0 | 1737.0 | 345.222062 | 361.152965 | 9174 | 4324 | 84.0 | 1177.0 | 133.0 | water |

| 4 | 2020-01-21 | 2020-01-22 | 3197.0 | 6420.0 | 5147.286480 | 335.922995 | 9174 | 4322 | 4514.0 | 5601.0 | ... | 6.0 | 1806.0 | 323.347279 | 289.699958 | 9174 | 4322 | 46.0 | 946.0 | 244.0 | water |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 76 | 2020-11-21 | 2020-11-22 | 3176.0 | 9725.0 | 6772.852958 | 1027.245684 | 43263 | 11755 | 5303.0 | 8617.0 | ... | 76.0 | 4110.0 | 1153.639711 | 402.932823 | 43263 | 11755 | 601.0 | 1895.0 | 1104.0 | urban |

| 77 | 2020-11-23 | 2020-11-24 | 4569.0 | 9331.0 | 6409.779460 | 856.631394 | 43263 | 20478 | 5240.0 | 8011.0 | ... | 362.0 | 4789.0 | 1558.450647 | 450.545776 | 43263 | 20478 | 928.0 | 2372.0 | 1509.0 | urban |

| 78 | 2020-12-01 | 2020-12-02 | 2071.0 | 9132.0 | 6269.664099 | 843.729608 | 43263 | 24436 | 5136.0 | 7889.0 | ... | 0.0 | 4830.0 | 1166.617942 | 410.184240 | 43263 | 24436 | 597.0 | 1894.0 | 1123.0 | urban |

| 79 | 2020-12-13 | 2020-12-14 | 345.0 | 9960.0 | 6094.379493 | 1095.370200 | 43263 | 42317 | 4966.0 | 8163.0 | ... | 111.0 | 3364.0 | 1268.115222 | 530.037701 | 43263 | 42317 | 447.0 | 2163.0 | 1271.0 | urban |

| 80 | 2020-12-26 | 2020-12-27 | 4121.0 | 9972.0 | 6670.385299 | 1001.918190 | 43263 | 11755 | 5267.0 | 8491.0 | ... | 68.0 | 4318.0 | 1064.905230 | 409.194536 | 43263 | 11755 | 494.0 | 1779.0 | 1021.0 | urban |

253 rows × 156 columns

Next, we can rescale values back to the correct scale. The following will:

convert statistical values for bands from digital numbers to reflectances,

apply an inverse transformation of

toUINTfunction on statistical values for indices.

[16]:

BANDS = DataCollection.SENTINEL2_L2A.bands

INDICES = ["NDVI", "NDVI_RE1", "NBSI", "CLP"]

STATISTICAL_QUANTITIES = ["mean", "min", "max", "stDev", "percentiles_5.0", "percentiles_50.0", "percentiles_95.0"]

for band in BANDS:

for stat in STATISTICAL_QUANTITIES:

column_name = f"bands_{band.name}_{stat}"

column = features_df[column_name]

column = column / 10000.0

features_df[column_name] = column

for index in INDICES:

for stat in STATISTICAL_QUANTITIES:

column_name = f"indices_{index}_{stat}"

column = features_df[column_name]

if stat == "stDev":

column = column / 5000.0

else:

column = (column - 5000.0) / 5000.0

features_df[column_name] = column

features_df

[16]:

| interval_from | interval_to | indices_NDVI_min | indices_NDVI_max | indices_NDVI_mean | indices_NDVI_stDev | indices_NDVI_sampleCount | indices_NDVI_noDataCount | indices_NDVI_percentiles_5.0 | indices_NDVI_percentiles_95.0 | ... | bands_B12_min | bands_B12_max | bands_B12_mean | bands_B12_stDev | bands_B12_sampleCount | bands_B12_noDataCount | bands_B12_percentiles_5.0 | bands_B12_percentiles_95.0 | bands_B12_percentiles_50.0 | land_type | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2020-01-06 | 2020-01-07 | -1.0000 | 0.8126 | -0.203616 | 0.222676 | 9174 | 4322 | -0.6226 | 0.1214 | ... | 0.0000 | 0.0117 | 0.003009 | 0.001536 | 9174 | 4322 | 0.0005 | 0.0055 | 0.0031 | water |

| 1 | 2020-01-09 | 2020-01-10 | -0.9650 | 0.9630 | -0.029156 | 0.281115 | 9174 | 4322 | -0.5112 | 0.3828 | ... | 0.0014 | 0.0207 | 0.006007 | 0.001894 | 9174 | 4322 | 0.0035 | 0.0090 | 0.0058 | water |

| 2 | 2020-01-11 | 2020-01-12 | -1.0000 | 0.9474 | -0.032310 | 0.239917 | 9174 | 4322 | -0.4468 | 0.3334 | ... | 0.0000 | 0.0236 | 0.002600 | 0.002260 | 9174 | 4322 | 0.0004 | 0.0058 | 0.0021 | water |

| 3 | 2020-01-14 | 2020-01-15 | -0.1892 | 0.3180 | 0.002346 | 0.053371 | 9174 | 4324 | -0.0852 | 0.0704 | ... | 0.0062 | 0.1737 | 0.034522 | 0.036115 | 9174 | 4324 | 0.0084 | 0.1177 | 0.0133 | water |

| 4 | 2020-01-21 | 2020-01-22 | -0.3606 | 0.2840 | 0.029457 | 0.067185 | 9174 | 4322 | -0.0972 | 0.1202 | ... | 0.0006 | 0.1806 | 0.032335 | 0.028970 | 9174 | 4322 | 0.0046 | 0.0946 | 0.0244 | water |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 76 | 2020-11-21 | 2020-11-22 | -0.3648 | 0.9450 | 0.354571 | 0.205449 | 43263 | 11755 | 0.0606 | 0.7234 | ... | 0.0076 | 0.4110 | 0.115364 | 0.040293 | 43263 | 11755 | 0.0601 | 0.1895 | 0.1104 | urban |

| 77 | 2020-11-23 | 2020-11-24 | -0.0862 | 0.8662 | 0.281956 | 0.171326 | 43263 | 20478 | 0.0480 | 0.6022 | ... | 0.0362 | 0.4789 | 0.155845 | 0.045055 | 43263 | 20478 | 0.0928 | 0.2372 | 0.1509 | urban |

| 78 | 2020-12-01 | 2020-12-02 | -0.5858 | 0.8264 | 0.253933 | 0.168746 | 43263 | 24436 | 0.0272 | 0.5778 | ... | 0.0000 | 0.4830 | 0.116662 | 0.041018 | 43263 | 24436 | 0.0597 | 0.1894 | 0.1123 | urban |

| 79 | 2020-12-13 | 2020-12-14 | -0.9310 | 0.9920 | 0.218876 | 0.219074 | 43263 | 42317 | -0.0068 | 0.6326 | ... | 0.0111 | 0.3364 | 0.126812 | 0.053004 | 43263 | 42317 | 0.0447 | 0.2163 | 0.1271 | urban |

| 80 | 2020-12-26 | 2020-12-27 | -0.1758 | 0.9944 | 0.334077 | 0.200384 | 43263 | 11755 | 0.0534 | 0.6982 | ... | 0.0068 | 0.4318 | 0.106491 | 0.040919 | 43263 | 11755 | 0.0494 | 0.1779 | 0.1021 | urban |

253 rows × 156 columns

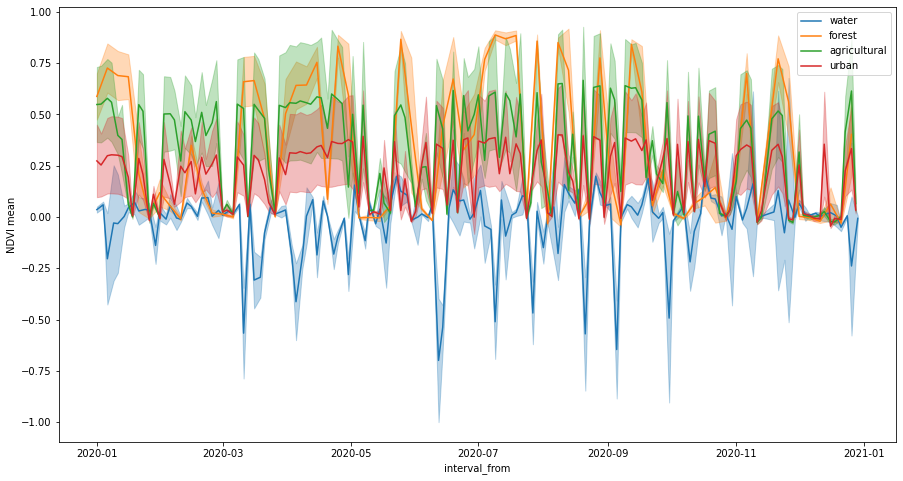

Let’s plot NDVI time series. The number of irregularities in the series has decreased because we masked out cloudy pixels.

[17]:

fig, ax = plt.subplots(figsize=(15, 8))

ax.set_ylabel("NDVI mean")

for idx, land_type in enumerate(polygons_gdf["land_type"].values):

series = features_df[features_df["land_type"] == land_type]

series.plot(ax=ax, x="interval_from", y="indices_NDVI_mean", color=f"C{idx}", label=land_type)

ax.fill_between(

series.interval_from.values,

series["indices_NDVI_mean"] - series["indices_NDVI_stDev"],

series["indices_NDVI_mean"] + series["indices_NDVI_stDev"],

color=f"C{idx}",

alpha=0.3,

);